There isn’t much information on the internet about how to visualize your embeddings with TensorBoard, and I had a hard time getting it to work properly. There are some tutorials out there, but most of them are for TensorFlow 1, and I didn’t find the TensorBoard documentation page much help. So here’s how I made it work; hopefully it’ll help you too. Before we continue, I’ll assume you already have an image dataset with labels and a model ready.

Okay let’s dive in. Here’s what we’ll need to do:

- Use our model to predict on the training set and take the output of the embedding layer.
- Create a sprite image for projecting images on TensorBoard.
- Save the labels (and other metadata, if available) to a metadata.tsv file.
- Save a TensorFlow checkpoint with the embedding vectors as a tf.Variable.

All these files must be saved in the same folder since later we’ll call TensorBoard and specify this directory as log_dir for it to find the necessary files.

Feature extraction model

First, create your model and load the appropriate weights. Mine is just a pretrained ResNet50 with an additional dense layer of 64 neurons as the embedding layer.

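import os
import tensorflow as tf

# create_model and cfg come from your own project
model = create_model(cfg)
log_dir = 'embedding_log'
os.makedirs(log_dir, exist_ok=True)  # np.save below fails if the folder is missing

ckpt_path = tf.train.latest_checkpoint('./checkpoints/ckpt0')
if ckpt_path is not None:
    print("Load ckpt from {}".format(ckpt_path))
    # expect_partial() silences warnings about checkpoint values the model doesn't use
    model.load_weights(ckpt_path).expect_partial()
else:
    raise ValueError("No ckpt found")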

Next we’ll save the model’s embedding vectors for future runs. Here I loop through the training dataset, predict on each batch of images, and append the embedding vectors, labels and images to their respective lists. If you have the labels and images ready, you can just call model.predict(train_dataset) directly. It’ll process the whole dataset and output an embedding matrix, so you don’t have to loop through the dataset like I do here.

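import numpy as np
from tqdm import tqdm

image_list = []
label_list = []
embedding_list = []

# train_dataset and steps_per_epoch come from your own input pipeline
for batch in tqdm(train_dataset, total=steps_per_epoch + 1):
    images, labels = batch[0]
    embeddings = model.predict((images, labels))
    embedding_list.append(embeddings)
    # Labels are one-hot here, so argmax gives the class index
    label_list.append(np.argmax(labels, axis=1))
    # Scale the pixel values back up to the 0-255 range
    image_list.append(images.numpy() * 255)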

embeddings = np.concatenate(embedding_list, axis=0)

labels = np.concatenate(label_list, axis=0)

images = np.concatenate(image_list, axis=0)

# Should save these for future runs

# Use an if-else block to check if the files exist

# So you don't have to loop through the dataset every run.

np.save(os.path.join(log_dir, 'embeddings.npy'), embeddings)

print("Saved embeddings to {}".format(os.path.join(log_dir, 'embeddings.npy')))

np.save(os.path.join(log_dir, 'labels.npy'), labels)

print("Saved labels to {}".format(os.path.join(log_dir, 'labels.npy')))

np.save(os.path.join(log_dir, 'images.npy'), images)

print("Saved images to {}".format(os.path.join(log_dir, 'images.npy')))

print("Embeddings shape: {}".format(embeddings.shape))

print("Labels shape: {}".format(labels.shape))

print("Images shape: {}".format(images.shape))

>>> Embeddings shape: (100, 64)

>>> Labels shape: (100, )

>>> Images shape: (100, 256, 256, 3)
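The comment in the code above suggests checking whether the files already exist before looping through the dataset again. Here is a minimal sketch of that idea. The helper name load_or_compute and its compute_fn argument are my own placeholders, not part of the original script.

```python
import os
import numpy as np


def load_or_compute(path, compute_fn):
    """Return the array saved at `path`; if it is missing, compute and save it first.

    `path` should end with '.npy', because np.save appends that suffix otherwise
    and the existence check would then never find the file.
    """
    if os.path.exists(path):
        return np.load(path)
    array = np.asarray(compute_fn())
    os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
    np.save(path, array)
    return array
```

With something like this, the extraction loop could be wrapped in a function (say, a hypothetical extract_embeddings) and called as load_or_compute(os.path.join(log_dir, 'embeddings.npy'), extract_embeddings), so the dataset is only processed on the first run.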

$ ls -alh embedding_log/

drwxr-xr-x 3 nttai nttai 4.0K Jun 11 15:00 .

drwxrwxr-x 18 nttai nttai 4.0K Jun 10 10:23 ..

-rw-r--r-- 1 nttai nttai 7.5M Jun 10 10:24 embeddings.npy

-rw-r--r-- 1 nttai nttai 45G Jun 10 10:27 images.npy

-rw-r--r-- 1 nttai nttai 481K Jun 10 10:27 labels.npy

Sprite image

Next we’ll create a sprite image so that when the embedding vectors are projected into three-dimensional space, TensorBoard shows the thumbnails we create instead of just a bunch of dots. Just make sure the thumbnails are in the same order as the embedding vectors.

Keep in mind that TensorBoard only accepts sprite images with a maximum dimension of 8192x8192 pixels, so for a large training set you should select only a subset. Here, since my train_dataset only has 100 images, I’ll save each thumbnail with a size of 256x256 pixels.
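To get a feel for how many thumbnails fit under that limit, here is a small sketch of the arithmetic. The function name sprite_grid is my own, and it assumes the same square grid layout used in this post (the grid side is the ceiling of the square root of the image count).

```python
import math

MAX_SPRITE_DIM = 8192  # sprite size limit mentioned above


def sprite_grid(num_images, thumb_size, max_dim=MAX_SPRITE_DIM):
    """Return (grid_side, sprite_side_px) for a square grid of thumbnails.

    Raises ValueError if the resulting sprite would exceed max_dim pixels.
    """
    grid_side = math.ceil(math.sqrt(num_images))
    sprite_side = grid_side * thumb_size
    if sprite_side > max_dim:
        max_images = (max_dim // thumb_size) ** 2
        raise ValueError(
            f"{num_images} thumbnails of {thumb_size}px need a {sprite_side}px "
            f"sprite; at most {max_images} fit within {max_dim}px"
        )
    return grid_side, sprite_side


print(sprite_grid(100, 256))  # (10, 2560)
```

So with 256x256 thumbnails the grid can be at most 32x32, which is 1024 images. For a bigger subset you would have to shrink the thumbnails.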

from PIL import Image

def images_to_sprite(images, log_dir, size):
    # Number of thumbnails per row and per column of the square grid
    one_square_size = int(np.ceil(np.sqrt(len(images))))
    master_width = size * one_square_size
    master_height = size * one_square_size
    spriteimage = Image.new(
        mode='RGBA',
        size=(master_width, master_height),
        color=(0, 0, 0, 0)
    )
    print("Writing sprite image to {}".format(
        os.path.join(log_dir, 'sprite.jpg')))
    for count, image in tqdm(enumerate(images), total=len(images)):
        # div is the row and mod is the column of this thumbnail in the grid
        div, mod = divmod(count, one_square_size)
        h_loc = size * div
        w_loc = size * mod
        image = image.squeeze()
        image = Image.fromarray(image.astype(np.uint8)).resize((size, size))
        spriteimage.paste(image, (w_loc, h_loc))
    # JPEG has no alpha channel, so convert to RGB before saving
    spriteimage.convert('RGB').save(f'{log_dir}/sprite.jpg', transparency=0)

thumbnail_size = 256
images_to_sprite(images, log_dir, thumbnail_size)

$ ls -alh embedding_log/

drwxr-xr-x 3 nttai nttai 4.0K Jun 11 15:00 .

drwxrwxr-x 18 nttai nttai 4.0K Jun 10 10:23 ..

-rw-r--r-- 1 nttai nttai 7.5M Jun 10 10:24 embeddings.npy

-rw-r--r-- 1 nttai nttai 45G Jun 10 10:27 images.npy

-rw-r--r-- 1 nttai nttai 481K Jun 10 10:27 labels.npy

-rw-r--r-- 1 nttai nttai 144K Jun 11 15:00 sprite.jpg

Metadata to tsv file

If you have class names or image ids and want to display them rather than the class label, you can write those as additional columns. Note that when there’s more than one column, you’re required to write the column names as the first row, separated by tabs, like so.

label   name   image_path
0       cat    images/cat/1.jpg
1       dog    images/dog/1.jpg
1       dog    images/dog/2.jpg
...     ...    ...

Since I only have image labels, I’ll use the writerows() method of Python’s csv module.

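import csv

# newline='' lets the csv module control the line endings itself
with open(f'{log_dir}/metadata.tsv', 'w', newline='') as fw:
    csv_writer = csv.writer(fw, delimiter="\t")
    # One label per row; a single-column file has no header row
    csv_writer.writerows(np.expand_dims(labels, axis=1))
print("Labels saved to {}".format(os.path.join(log_dir, 'metadata.tsv')))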

Values inside metadata.tsv should look something like this.

2304
865
3224
1440
3122
4285
334
5282
1744
1026
6774
...
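If you do have more than one column, the file needs the header row described earlier. Here is a minimal sketch of how that could be written with the same csv module. The function name write_metadata is hypothetical, and the rows are just the example values from the table above.

```python
import csv


def write_metadata(path, rows, header=("label", "name", "image_path")):
    """Write a tab-separated metadata file with a header row followed by the data rows."""
    with open(path, "w", newline="") as fw:
        # lineterminator="\n" avoids the csv module's default "\r\n" line endings
        writer = csv.writer(fw, delimiter="\t", lineterminator="\n")
        writer.writerow(header)
        writer.writerows(rows)


# Example rows taken from the table earlier in this section
example_rows = [
    (0, "cat", "images/cat/1.jpg"),
    (1, "dog", "images/dog/1.jpg"),
    (1, "dog", "images/dog/2.jpg"),
]
```

Calling write_metadata(os.path.join(log_dir, 'metadata.tsv'), example_rows) would then produce a three-column file. The rows must be in the same order as the embedding vectors.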

$ ls -alh embedding_log/

drwxr-xr-x 3 nttai nttai 4.0K Jun 11 15:00 .

drwxrwxr-x 18 nttai nttai 4.0K Jun 10 10:23 ..

-rw-r--r-- 1 nttai nttai 7.5M Jun 10 10:24 embeddings.npy

-rw-r--r-- 1 nttai nttai 45G Jun 10 10:27 images.npy

-rw-r--r-- 1 nttai nttai 481K Jun 10 10:27 labels.npy

-rw-r--r-- 1 nttai nttai 585 Jun 11 15:00 metadata.tsv

-rw-r--r-- 1 nttai nttai 144K Jun 11 15:00 sprite.jpg

Embedding checkpoint

Now create a tf.Variable with the embeddings we extracted above and save it to the same log_dir directory.

embeddings = tf.Variable(embeddings, name='embeddings')

checkpoint = tf.train.Checkpoint(embedding=embeddings)

checkpoint.save(os.path.join(log_dir, "embedding.ckpt"))

$ ls -alh embedding_log/

drwxr-xr-x 3 nttai nttai 4.0K Jun 11 15:00 .

drwxrwxr-x 18 nttai nttai 4.0K Jun 10 10:23 ..

-rw-r--r-- 1 nttai nttai 89 Jun 11 15:00 checkpoint

-rw-r--r-- 1 nttai nttai 13K Jun 11 15:00 embedding.ckpt-1.data-00000-of-00001

-rw-r--r-- 1 nttai nttai 264 Jun 11 15:00 embedding.ckpt-1.index

-rw-r--r-- 1 nttai nttai 7.5M Jun 10 10:24 embeddings.npy

-rw-r--r-- 1 nttai nttai 45G Jun 10 10:27 images.npy

-rw-r--r-- 1 nttai nttai 481K Jun 10 10:27 labels.npy

-rw-r--r-- 1 nttai nttai 585 Jun 11 15:00 metadata.tsv

-rw-r--r-- 1 nttai nttai 144K Jun 11 15:00 sprite.jpg

Projector config

The last thing we’ll need is the TensorBoard projector config file, which specifies:

- The tensor_name. Don’t use embeddings.name; hardcode this line like below. Otherwise it’ll only project a dot for each image.
- The name of our metadata file.
- The name of our sprite image.
- The size of each thumbnail. TensorBoard will use this to cut the individual thumbnails out of the sprite image.

from tensorboard.plugins import projector

config = projector.ProjectorConfig()

embedding = config.embeddings.add()

# NOTE: Hardcode this line

embedding.tensor_name = 'embedding/.ATTRIBUTES/VARIABLE_VALUE'

embedding.metadata_path = 'metadata.tsv'

embedding.sprite.image_path = 'sprite.jpg'

embedding.sprite.single_image_dim.extend([thumbnail_size, thumbnail_size])

projector.visualize_embeddings(log_dir, config)
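If you are wondering where that hardcoded tensor name comes from, one way to check is to list the tensors stored in the checkpoint with tf.train.list_variables. The sketch below uses random stand-in embeddings and a temporary folder so it runs on its own; the helper name checkpoint_variable_names is my own.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


def checkpoint_variable_names(ckpt_dir):
    """Return the names of all tensors stored in the latest checkpoint in ckpt_dir."""
    return [name for name, _shape in tf.train.list_variables(ckpt_dir)]


# Stand-in data: random vectors with the same shape as the embeddings in this post
demo_dir = tempfile.mkdtemp()
demo_embeddings = tf.Variable(
    np.random.rand(100, 64).astype(np.float32), name="embeddings")
# The keyword argument name ("embedding") becomes the prefix of the stored tensor name
tf.train.Checkpoint(embedding=demo_embeddings).save(
    os.path.join(demo_dir, "embedding.ckpt"))

names = checkpoint_variable_names(demo_dir)
print(names)
```

The printed list should include embedding/.ATTRIBUTES/VARIABLE_VALUE, which is the name the projector config has to point at. It is derived from the keyword passed to tf.train.Checkpoint, not from the name given to the tf.Variable.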

The projector_config.pbtxt file should contain these fields.

embeddings {
  tensor_name: "embedding/.ATTRIBUTES/VARIABLE_VALUE"
  metadata_path: "metadata.tsv"
  sprite {
    image_path: "sprite.jpg"
    single_image_dim: 256
    single_image_dim: 256
  }
}

$ ls -alh embedding_log/

drwxr-xr-x 3 nttai nttai 4.0K Jun 11 15:00 .

drwxrwxr-x 18 nttai nttai 4.0K Jun 10 10:23 ..

-rw-r--r-- 1 nttai nttai 89 Jun 11 15:00 checkpoint

-rw-r--r-- 1 nttai nttai 13K Jun 11 15:00 embedding.ckpt-1.data-00000-of-00001

-rw-r--r-- 1 nttai nttai 264 Jun 11 15:00 embedding.ckpt-1.index

-rw-r--r-- 1 nttai nttai 7.5M Jun 10 10:24 embeddings.npy

-rw-r--r-- 1 nttai nttai 45G Jun 10 10:27 images.npy

-rw-r--r-- 1 nttai nttai 481K Jun 10 10:27 labels.npy

-rw-r--r-- 1 nttai nttai 585 Jun 11 15:00 metadata.tsv

-rw-r--r-- 1 nttai nttai 197 Jun 11 15:00 projector_config.pbtxt

-rw-r--r-- 1 nttai nttai 144K Jun 11 15:00 sprite.jpg
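Before launching TensorBoard, it can be handy to check that nothing is missing from the folder and that the metadata has one row per embedding. This is only a rough sketch with helper names of my own; the file names are the ones from the listing above.

```python
import os

# File names taken from the directory listing above
REQUIRED_FILES = ("checkpoint", "metadata.tsv", "sprite.jpg", "projector_config.pbtxt")


def missing_projector_files(log_dir, required=REQUIRED_FILES):
    """Return the required file names that are not present in log_dir."""
    return [name for name in required
            if not os.path.isfile(os.path.join(log_dir, name))]


def count_metadata_rows(metadata_path, has_header=False):
    """Count the non-empty data rows in a metadata file, skipping the header if present."""
    with open(metadata_path, newline="") as f:
        n_rows = sum(1 for line in f if line.strip())
    return n_rows - 1 if has_header else n_rows
```

For the setup in this post, missing_projector_files('embedding_log') should return an empty list, and count_metadata_rows('embedding_log/metadata.tsv') should equal the number of rows in the embedding matrix.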

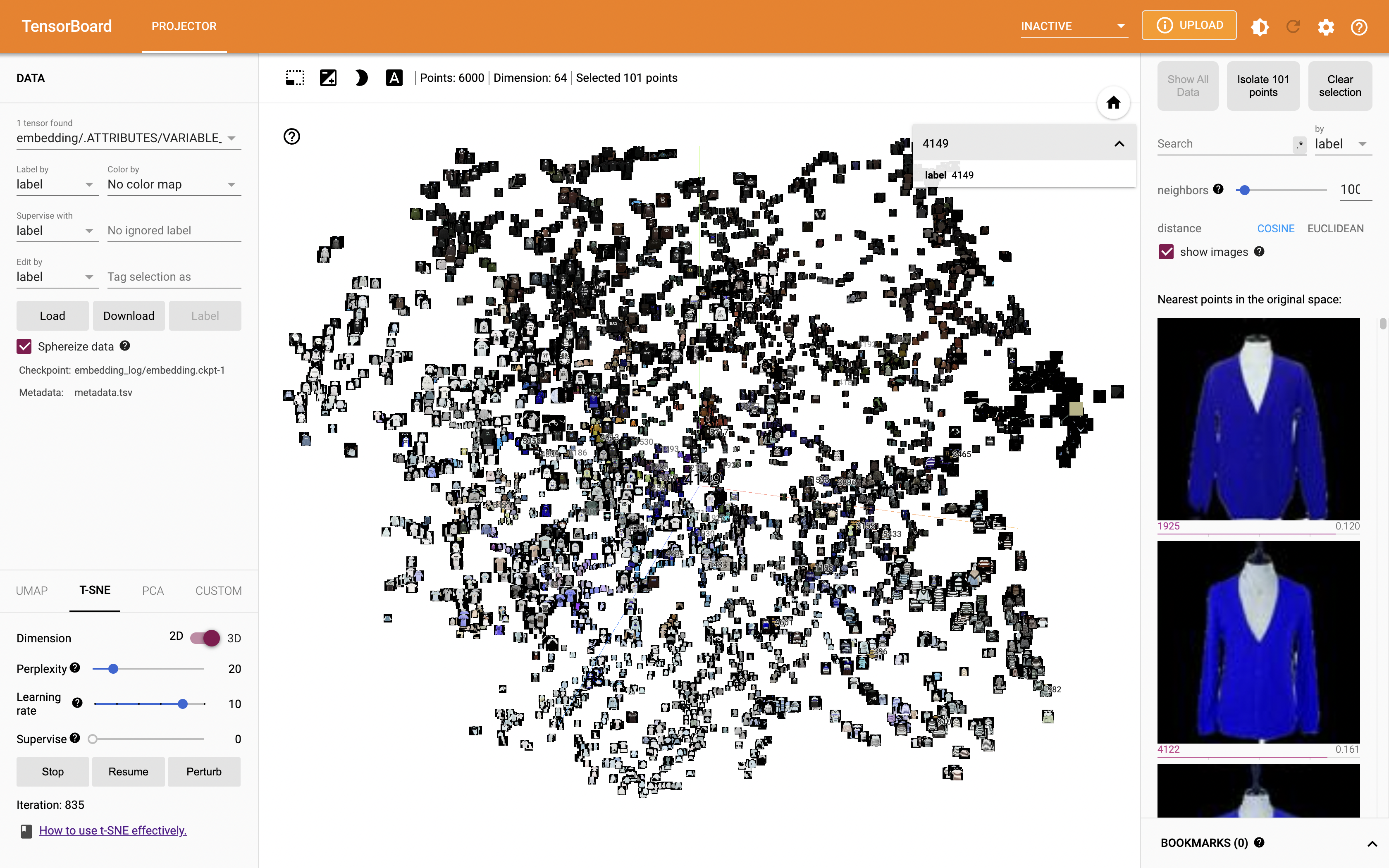

The result

Now that we have all the required files, let’s run TensorBoard on this directory and check out the result.

$ tensorboard --logdir embedding_log/

...

TensorBoard 2.9.0 at http://localhost:6006/ (Press CTRL+C to quit)

Open your browser and go to http://localhost:6006; it should look something like this.